My growing pile of posts on Substack is organized into three sections:

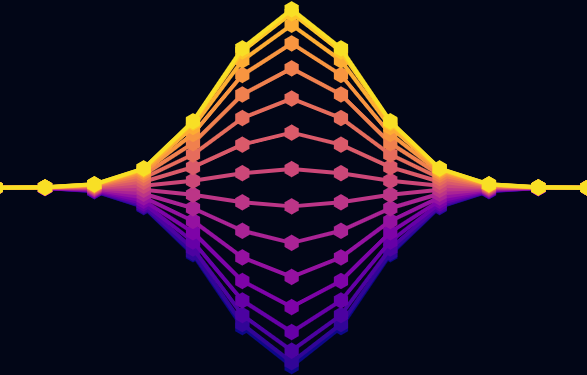

Data science, unpacked

Explore core concepts in data science and applied math through clear explanations, equations, and hands-on code. Each post unpacks a single topic, ranging from correlation and covariance to Fourier transforms, fractals, and neural simulations, translating between theory and Python implementation. Every post comes with a Python notebook so you can reproduce the results, experiment with the methods, and apply them to your own projects.

Each post is 1000-2000 words and takes 5-10 minutes to read (could take longer if you go through all the code in depth).

My goal in each post is to clarify points of confusion or ambiguity that people often have about commonly used methods, or to compare different-but-similar methods.

All of the code files for the posts are on my GitHub site.

- Correlation vs. cosine similarity

- The Fourier transform, explained with for-loops

- Bootstrapping confidence intervals

- Nuances of softmax

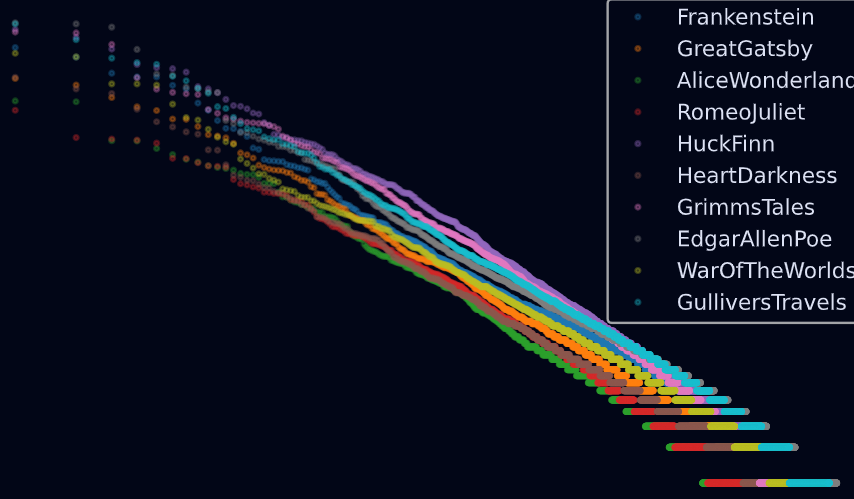

Dissecting LLMs with machine learning

Understand how large language models (LLMs) really work by applying machine learning (ML) methods to their internal activations. Each post explores how LLMs process text, isolate patterns, and generate new outputs. You’ll learn how to probe, manipulate, and explain model internals. Every article includes a complete Python notebook so you can reproduce the results, visualize the mechanisms, and extend the experiments further.

Each post is 1000-2000 words and takes 5-10 minutes to read (could take longer if you go through all the code in depth).

I take a different approach from how most people introduce LLMs. Instead of just describing them in words, I use machine-learning techniques (statistical analyses and visualizations) to investigate and reveal the internal dynamics of language models.

All of the code files for the posts are on my GitHub site.

- Drawing text heatmaps to visualize LLM calculations

- GPT breakdown 2/6: Logits and next-token prediction

- GPT breakdown 5/6: Attention

- Effective dimensionality analysis of LLM transformer blocks

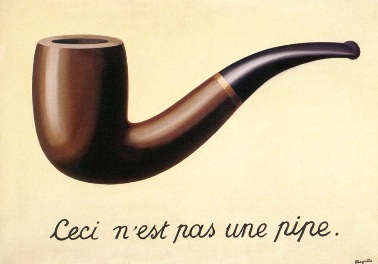

Mike's musings

A collection of meaningless meanderings. Perhaps you will find them amusing, thought-provoking, or boring.

These are short posts, generally 700-900 words. They're some loose thoughts that perhaps you will find interesting. Non-technical. Borderline philosophical. Not to be taken too seriously.

- This is not a post

- The cost of wine tasting

- Strunk and White's Elements of (Life)Style